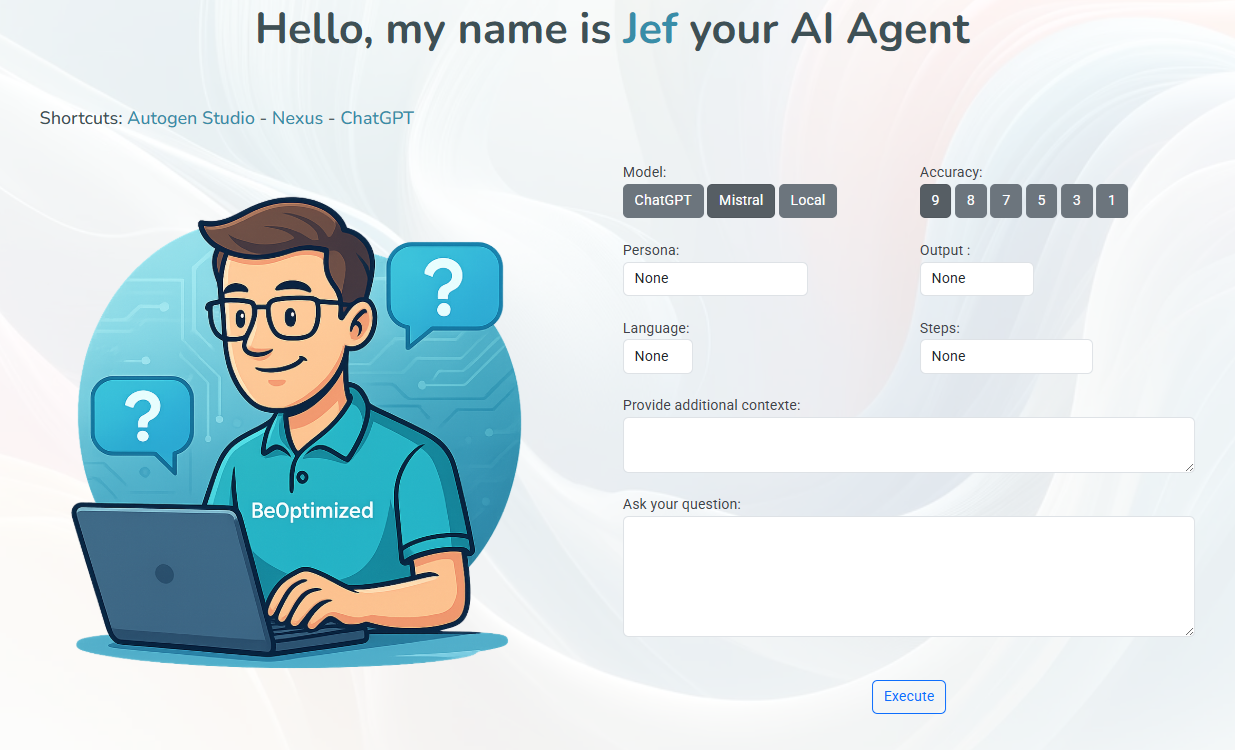

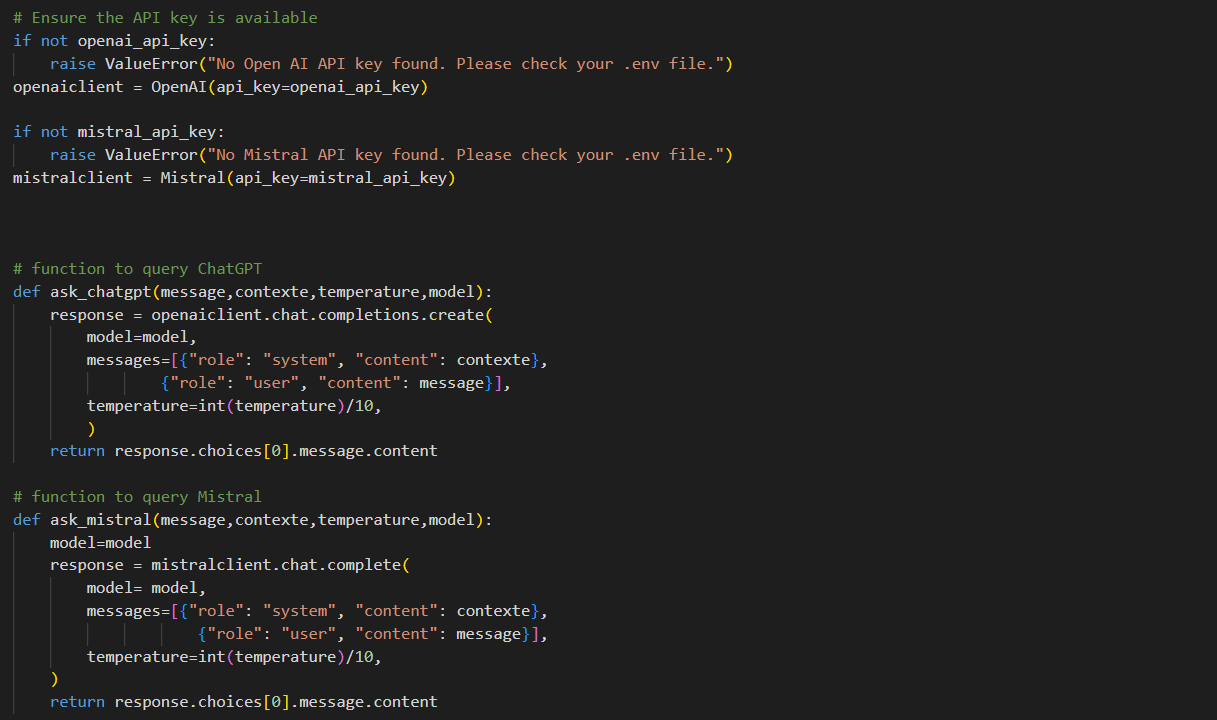

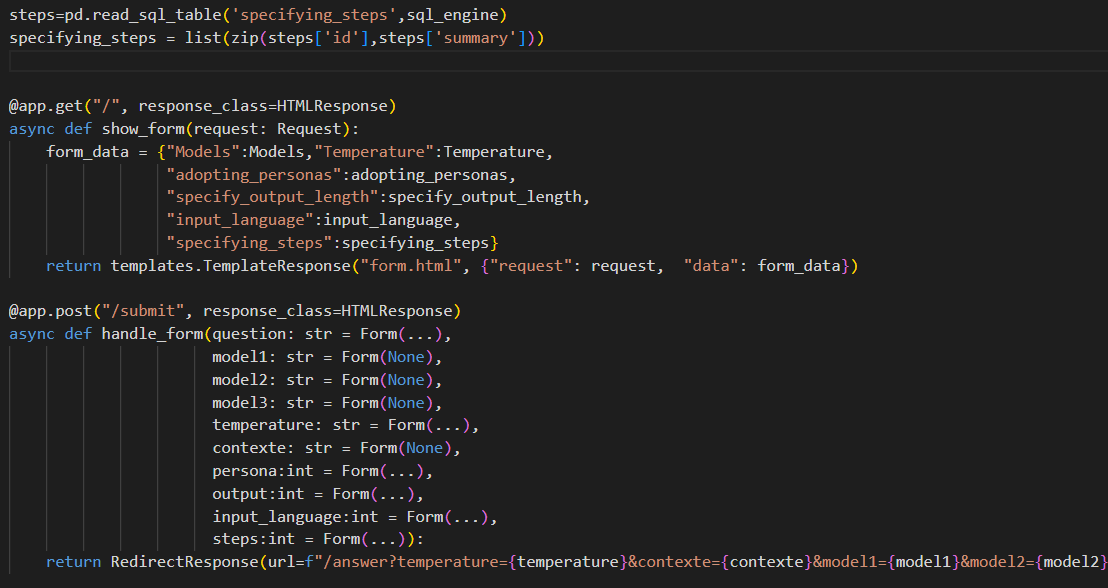

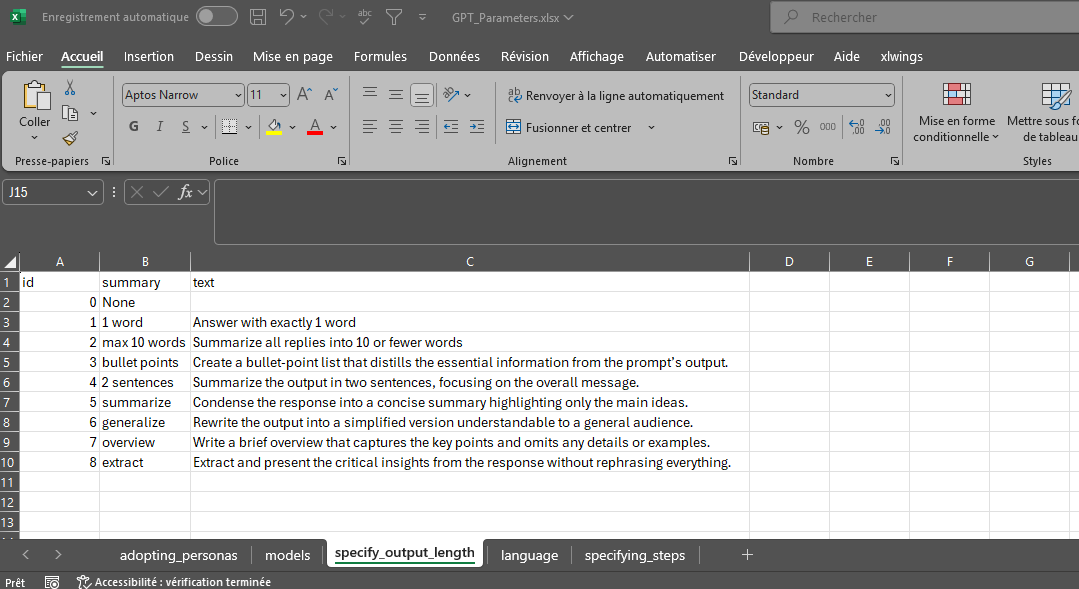

Jef is a FastAPI web app that acts as a 'layer' between AI tools such as OpenAI and BeOptimized collaborators. This layer helps users by providing custom context and by calling several LLMs to give multiple points of view. Custom contexts are stored in Excel, and queries are stored in a SQLite database for monitoring purposes.

The reason? At BeOptimized, we love GenAI, but what happens when we use ChatGPT through its default UI? We start a conversation with an agent and refine the question step by step until we get a final answer. In practice, it usually takes two or three exchanges to get the expected answer, because the LLM asks us to clarify the context of the question. Wouldn't it be faster to ask a single question with the right context from the start? That’s precisely what this web app does: before submitting the question, we pick predefined context from dropdown lists, and when the call is made, that context is automatically added to the question. As a result, the LLM provides the best answer directly, and we don’t waste time rewriting the context.

This layer is also important because companies have no control over the information transmitted to these tools. Here, Jef logs what is sent to the LLM so that it can be analyzed later to make sure no confidential data has been transmitted.

Finally, everyone says ChatGPT is the best LLM. Are you sure about that? Have you compared ChatGPT with the open-source Mistral? Or even with a model running on your own server (where you could safely send confidential data)?

Behind the scenes, a Python function with parameters was created and exposed using FastAPI. This function dynamically rebuilds the prompt context from the input parameters, makes the call, and stores the information in a table. The API runs in a container on a NAS server, with a mounted volume used to store the Excel parameters. This way, the function is always available, can be reused for several purposes, and its parameters are easily accessible from Windows.
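The core idea (rebuild the prompt from the selected parameters, call the model, log the exchange) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not Jef's actual code: the `CONTEXTS` dictionary stands in for the Excel file, `call_llm` is a hypothetical placeholder for whichever client (OpenAI, Mistral, local model) is used, and the `queries` table schema is assumed. In the real app, a function like `ask` would be exposed as a FastAPI endpoint.

```python
import sqlite3
from datetime import datetime, timezone
from typing import Callable

# Hypothetical predefined contexts. In Jef they live in an Excel file on the
# NAS volume; a plain dict keeps this sketch self-contained.
CONTEXTS = {
    "role": {
        "sas": "You are an expert SAS programmer.",
        "python": "You are an expert Python developer.",
    },
    "style": {
        "short": "Answer concisely with a code example.",
        "detailed": "Explain your answer step by step.",
    },
}


def build_prompt(question: str, **choices: str) -> str:
    """Prepend the context text chosen in each dropdown to the question."""
    parts = [CONTEXTS[dropdown][value] for dropdown, value in choices.items()]
    return "\n".join(parts + [question])


def ask(question: str, call_llm: Callable[[str], str], db: sqlite3.Connection,
        model: str, **choices: str) -> str:
    """Build the prompt, call the model, and log the exchange for later audit."""
    prompt = build_prompt(question, **choices)
    answer = call_llm(prompt)
    # Every prompt actually sent is stored, so it can be checked for confidential data.
    db.execute(
        "INSERT INTO queries (ts, model, prompt, answer) VALUES (?, ?, ?, ?)",
        (datetime.now(timezone.utc).isoformat(), model, prompt, answer),
    )
    db.commit()
    return answer


# In-memory database for the sketch; a file path would persist the log.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE IF NOT EXISTS queries (ts TEXT, model TEXT, prompt TEXT, answer TEXT)")
```

For example, `ask("How do I count the words in a string?", my_client, db, "mistral", role="sas", style="short")` would send the two selected context lines followed by the question, where `my_client` is any hypothetical function that takes a prompt and returns the model's text.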
This project was made possible thanks to three books I highly recommend: AI Agents in Action (Michael Lanham), Unlocking Data with Generative AI and RAG (Keith Bourne), and Building Python Web APIs with FastAPI (Abdulazeez Abdulazeez Adeshina).

This AI agent is able to perform several tasks:

This web page is an LLM context prompt designer. It contains a couple of dropdown lists that let you build a custom, accurate prompt context, so that the LLM provides the best answer directly.

No conversation is needed to refine the context — you provide it directly.

Want to switch LLMs, use a local one, or even compare answers from different LLMs? No problem: this can be done with a single click.
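Switching or comparing models boils down to sending the same prompt to several backends. A minimal sketch, assuming each model is wrapped in a hypothetical callable that takes a prompt and returns text (the lambdas in the test are stubs, not real API calls). Threads are used because each call is an I/O-bound HTTP request, so the slowest model sets the total wait time rather than the sum of all of them.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


def compare_models(prompt: str, backends: Dict[str, Callable[[str], str]]) -> Dict[str, str]:
    """Send the same prompt to every backend in parallel; return answers keyed by model name."""
    # max_workers must be at least 1, even if no backend is given.
    with ThreadPoolExecutor(max_workers=len(backends) or 1) as pool:
        futures = {name: pool.submit(call, prompt) for name, call in backends.items()}
        return {name: future.result() for name, future in futures.items()}
```

Each entry in `backends` could wrap a hosted API (ChatGPT, Mistral) or a model on your own server; the comparison logic does not need to know which.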

Have a look at the video below to see how it works :-)

In this video, the project is briefly described, and prompts are run with ChatGPT, Mistral and a local LLM.

Question:

Answer:

Answer:

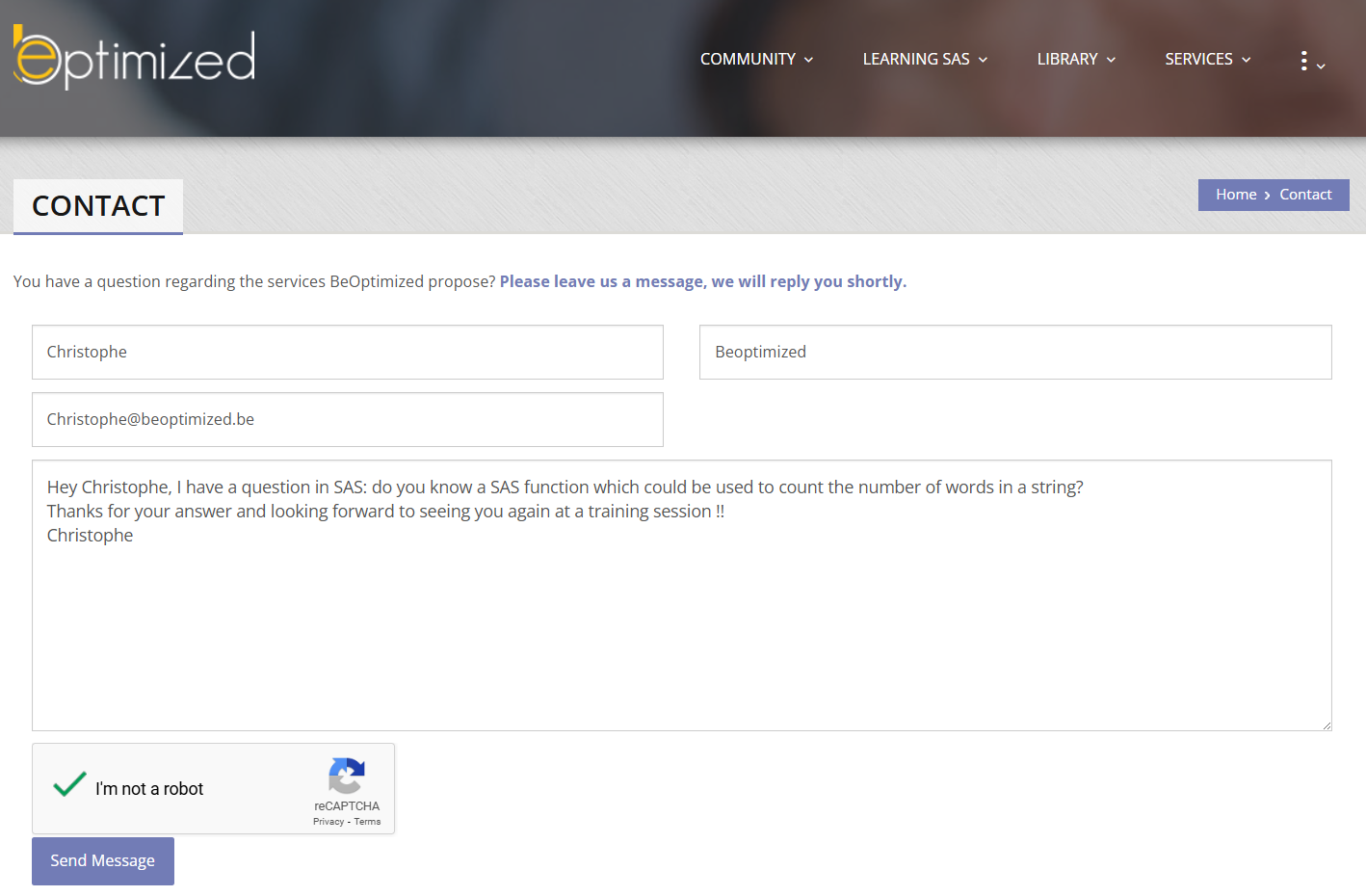

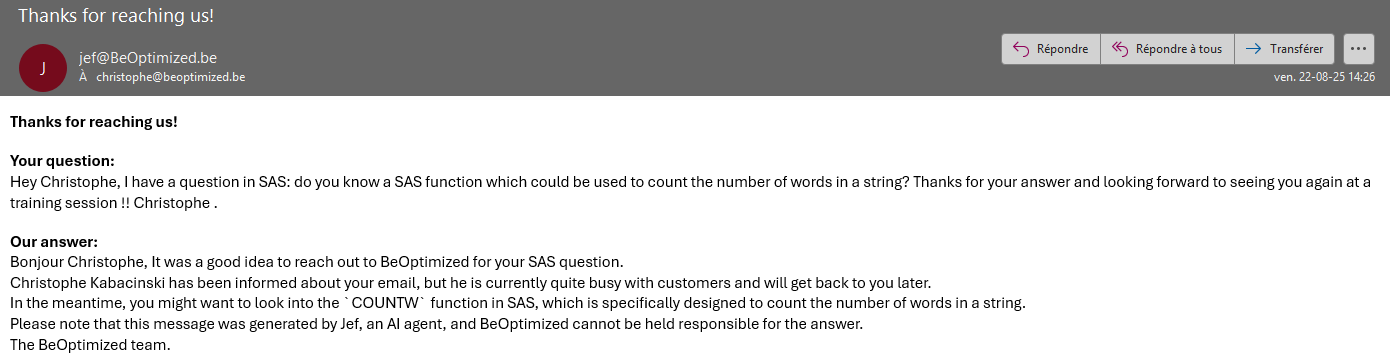

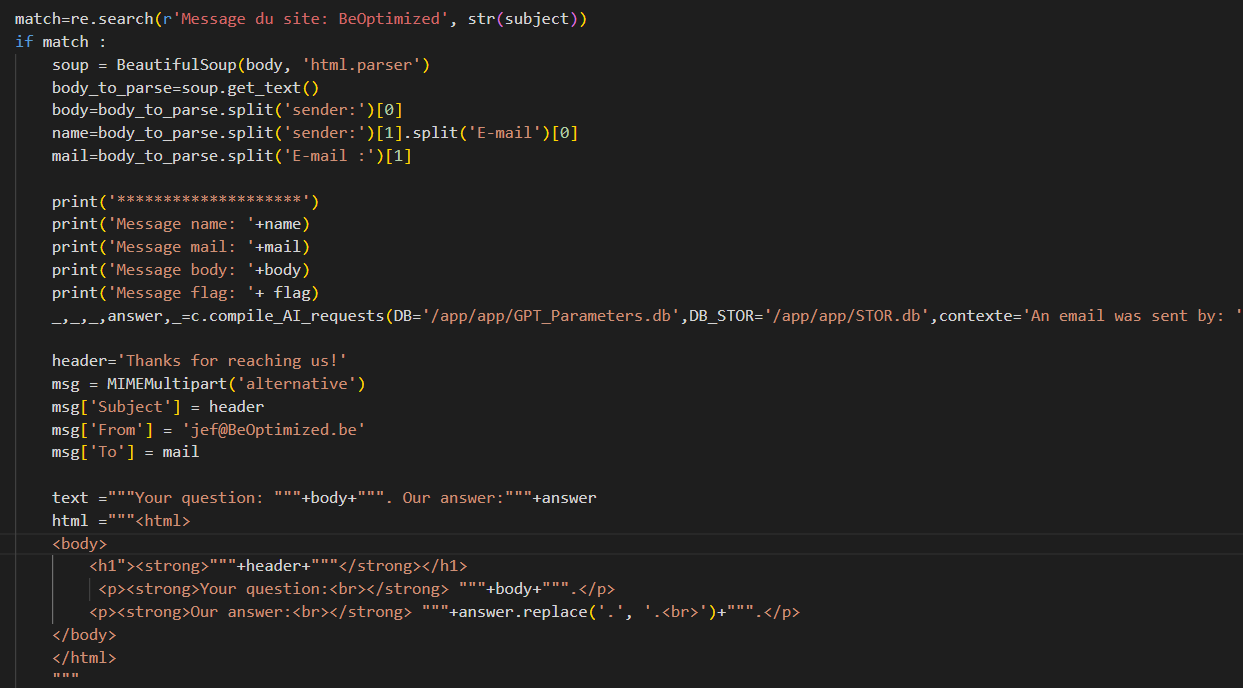

Automatic replies have existed since the early days of the internet, but Jef’s answers are far from basic: first, Jef answers your question in your own language; then, it provides an intelligent answer, with correct syntax where possible; and finally, it states that it is just an AI and that a human will answer shortly. In the example shown, I didn’t even have to answer, because the `COUNTW` function in SAS was the expected response :-) Behind the scenes, the website itself was not modified.
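One plausible way to get these three behaviours is to wrap each incoming question in fixed instructions before sending it to the LLM. The source does not say how Jef does this, so treat the following as an assumption: `build_auto_reply_prompt` and its wording are hypothetical, and the reply language is left to the model rather than detected in code.

```python
def build_auto_reply_prompt(question: str, signature: str = "Jef") -> str:
    """Wrap a website question in instructions for an automatic first reply (hypothetical)."""
    instructions = [
        # 1. Let the model mirror the visitor's language.
        "Reply in the same language as the question.",
        # 2. Ask for a precise answer, with code when relevant.
        "Give a precise answer; include correct code syntax when the question is about code.",
        # 3. Be transparent that this is an AI and a human will follow up.
        f"End by stating that you are {signature}, an AI assistant, and that a human will answer shortly.",
    ]
    return "\n".join(instructions) + "\n\nQuestion:\n" + question
```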

In this demo, a question is asked from the website and answered by Jef.

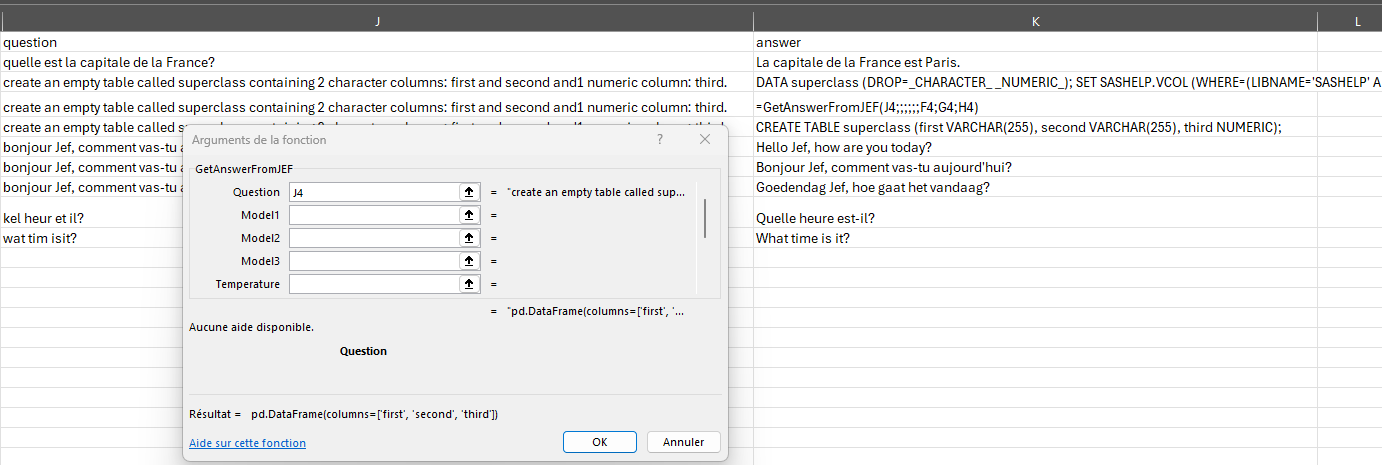

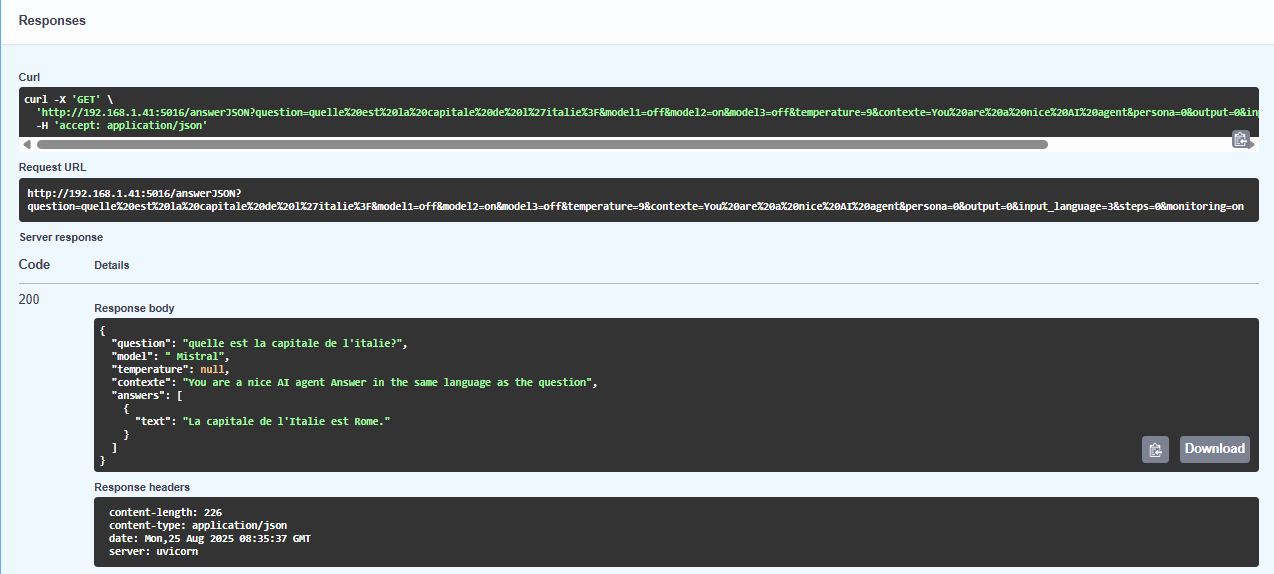

Jef is running in a Docker container on a NAS server. It was built using FastAPI and is available 24/7 on the local network. It can be accessed from a web browser as well as from other applications, such as MS Excel or any tool that can make HTTP requests.
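Because Jef is a plain HTTP API, any client only needs to build a URL with the right query parameters. A minimal client sketch using only the standard library; the host `nas.local:8000`, the `/ask` path, the parameter names and the JSON response are all assumptions for illustration, not Jef's actual interface.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

BASE_URL = "http://nas.local:8000"  # hypothetical host and port of the container


def build_url(question: str, model: str = "mistral", **context: str) -> str:
    """Build a GET URL for a hypothetical /ask endpoint; values are URL-encoded."""
    query = urlencode({"question": question, "model": model, **context})
    return f"{BASE_URL}/ask?{query}"


def ask_jef(question: str, **params: str) -> dict:
    """Call the API and decode its (assumed) JSON response."""
    with urlopen(build_url(question, **params), timeout=60) as response:
        return json.load(response)
```

The same URL string is what a tool like Excel's `WEBSERVICE` function or SAS `PROC HTTP` would request, which is why one endpoint can serve the browser, Excel and SAS alike.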

This video starts with the FastAPI documentation, then the API URL is called from SAS and Excel.
