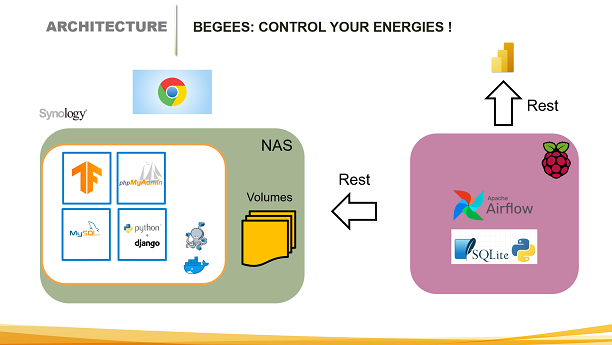

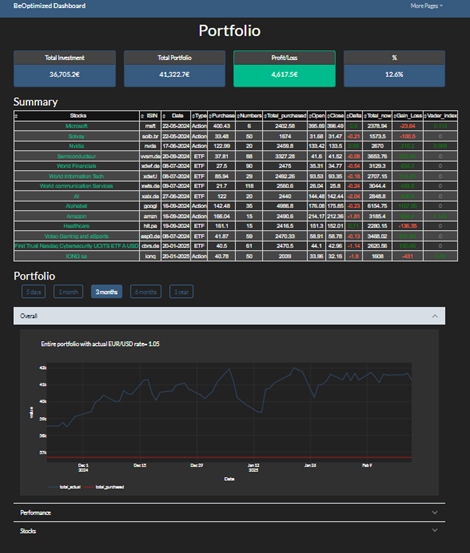

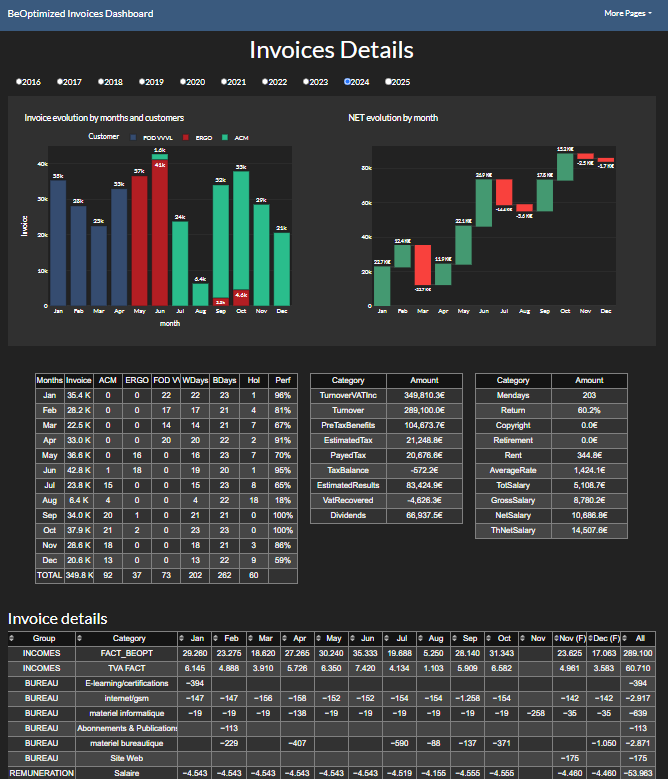

In this project, called BeGees, we are monitoring BeOptimized's energy consumption and production in real time.

This project uses several open-source packages and covers several stages of the data lifecycle: data capture, data quality, data transfer, data storage, and data presentation.

A Raspberry Pi connected to a smart meter captures the energy data and sends it to a database over the network.
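
As a rough illustration of the capture, quality, and transfer steps, here is a minimal Python sketch. It is not the project's actual code. The line format (`<code>(<value>*<unit>)`, modeled on DSMR P1-style smart meter telegrams), the `Reading` fields, and the function names `parse_line`, `is_plausible`, and `to_payload` are all assumptions made for the example. The real meter protocol and database client may differ.

```python
import json
import re
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional

# Assumed line format, modeled on DSMR P1-style telegrams: "<code>(<value>*<unit>)".
# The meter used in this project may emit something different.
LINE_RE = re.compile(
    r"^(?P<obis>\d+-\d+:\d+\.\d+\.\d+)\((?P<value>\d+(?:\.\d+)?)\*(?P<unit>\w+)\)$"
)


@dataclass
class Reading:
    """One measurement taken from the meter (hypothetical structure)."""
    obis: str          # identifier of the measured quantity
    value: float       # numeric reading
    unit: str          # unit reported by the meter, e.g. kWh
    captured_at: str   # ISO 8601 timestamp in UTC


def parse_line(line: str, now: Optional[datetime] = None) -> Optional[Reading]:
    """Data capture: turn one raw line into a Reading, or None if it is malformed."""
    match = LINE_RE.match(line.strip())
    if match is None:
        return None
    timestamp = now or datetime.now(timezone.utc)
    return Reading(
        obis=match["obis"],
        value=float(match["value"]),
        unit=match["unit"],
        captured_at=timestamp.isoformat(),
    )


def is_plausible(reading: Reading, max_value: float = 1_000_000.0) -> bool:
    """Data quality: reject readings outside an (assumed) sane range."""
    return 0.0 <= reading.value <= max_value


def to_payload(reading: Reading) -> str:
    """Data transfer: serialize the reading as JSON before sending it on."""
    return json.dumps(asdict(reading), sort_keys=True)


if __name__ == "__main__":
    reading = parse_line("1-0:1.8.1(001234.567*kWh)")
    if reading is not None and is_plausible(reading):
        # In a real setup this payload would be written to the database.
        print(to_payload(reading))
```

Keeping parsing, validation, and serialization in separate functions mirrors the lifecycle stages listed above and lets each step be tested without a meter or a database attached.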

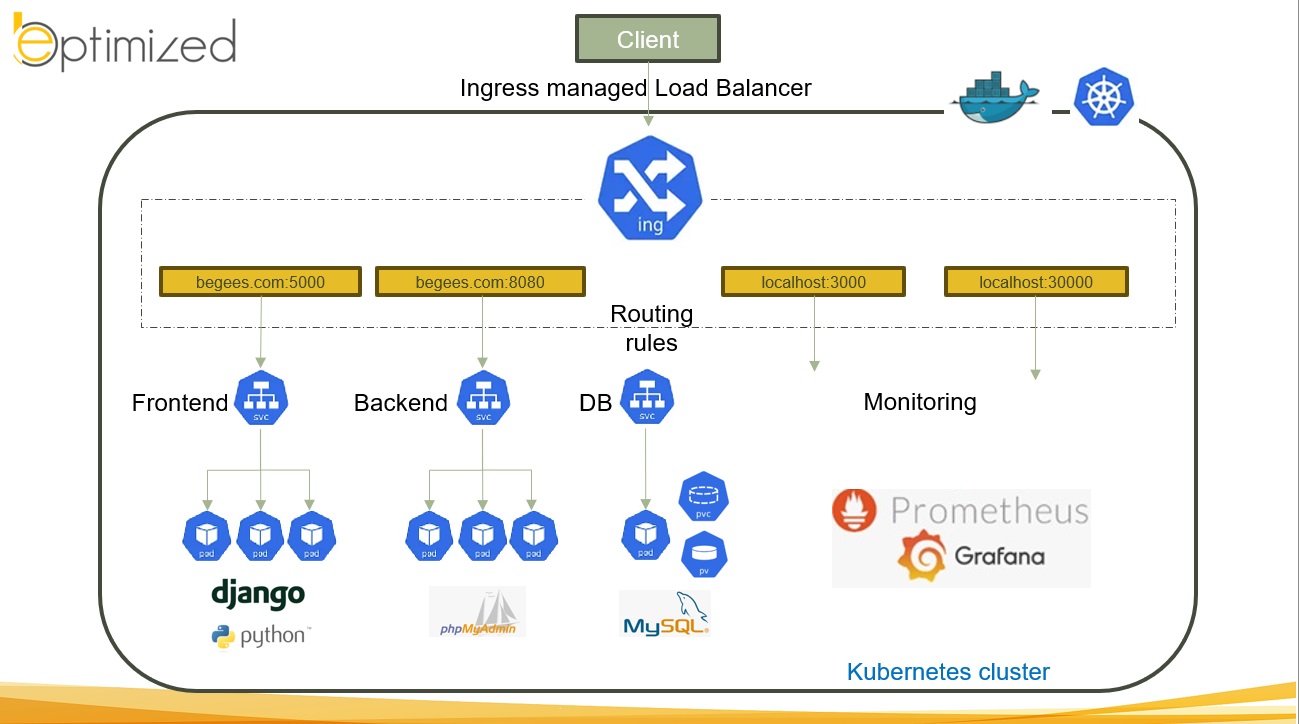

To make this work, all the packages and the database have been packaged as containers and are orchestrated with Docker Compose.

Docker and its containers run on a NAS server, whose folders are mounted on my desktop.