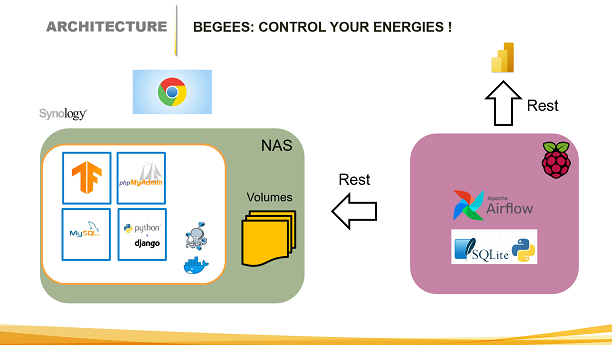

Begees is an internal BeOptimized project focused on understanding energy consumption and production at BeOptimized. The project uses several open-source packages and covers various aspects of the data lifecycle: data capture, data quality, data transfer, data storage and data presentation. Energy data are captured by a Raspberry Pi connected to a smart meter and sent to a database over the network. To make this work, all the packages and the database were converted into containers orchestrated via Docker-Compose. The containers and Docker run on a NAS server and are mounted to my desktop folders.

This project will continue to evolve in the future. We could analyse the time series to detect unusual patterns, analyse device signatures, identify trends, analyse electric car consumption, or send an SMS when there is a gas leak, a consumption peak, or even a power outage.
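As an illustration of the consumption-peak idea, here is a minimal sketch that flags a reading when it sits far above the recent average. The function name `find_peaks`, the window size, the threshold and the sample values are all assumptions made for this example; nothing like this exists in the project yet.

```python
from statistics import mean, stdev


def find_peaks(values, window=5, threshold=3.0):
    """Return the indexes of readings far above the preceding window.

    A reading counts as a peak when it exceeds the mean of the previous
    `window` readings by more than `threshold` standard deviations.
    """
    peaks = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        # A flat history (sigma == 0) gives no scale to compare against.
        if sigma > 0 and (values[i] - mu) / sigma > threshold:
            peaks.append(i)
    return peaks


# Hypothetical consumption readings in kWh, with one obvious spike.
readings = [0.40, 0.50, 0.45, 0.50, 0.42, 0.48, 3.20, 0.50, 0.44]
peaks = find_peaks(readings)
```

An alert such as an SMS could then be triggered for each index in `peaks`. The threshold would need tuning against real meter data.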

On the Raspberry Pi, we could also replace SQLite/Airflow with a Docker image in order to package the solution and install it more easily. We could also move the existing containers to the cloud and replace Docker-Compose with Kubernetes.

In this video I briefly explain which Python library was used to connect to the smart meter. I also explain how I did the data extraction and storage.
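The storage step could look roughly like the sketch below, which writes one reading to SQLite using Python's standard `sqlite3` module. The table name `readings`, its columns and the sample values are assumptions for illustration. The code that actually reads the smart meter is left out because it depends on the library shown in the video.

```python
import sqlite3
from datetime import datetime, timezone


def open_store(path=":memory:"):
    """Open (or create) the SQLite database and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS readings (
            captured_at  TEXT PRIMARY KEY,  -- ISO 8601 timestamp (hypothetical column)
            consumed_kwh REAL NOT NULL,     -- hypothetical column
            produced_kwh REAL NOT NULL      -- hypothetical column
        )
        """
    )
    return conn


def store_reading(conn, captured_at, consumed_kwh, produced_kwh):
    """Insert one reading; a repeated timestamp is ignored, not duplicated."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT OR IGNORE INTO readings VALUES (?, ?, ?)",
            (captured_at.isoformat(), consumed_kwh, produced_kwh),
        )


conn = open_store()  # in-memory here; a file path would be used on the Raspberry Pi
stamp = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
store_reading(conn, stamp, 1234.5, 67.8)
store_reading(conn, stamp, 1234.5, 67.8)  # same timestamp: ignored
rows = conn.execute("SELECT * FROM readings").fetchall()
```

Using the timestamp as primary key together with `INSERT OR IGNORE` is one way to keep a scheduled job from storing the same reading twice if it is re-run.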

In a second phase, I describe what I did to push the information to Power BI in order to display it in real time.
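One common way to feed Power BI in real time is a streaming dataset, which accepts rows as a JSON array sent by HTTP POST to a push URL. The sketch below assumes that approach; the video may use a different mechanism. The field names and the `push_url` argument are placeholders, and the real URL would come from the dataset's settings in Power BI.

```python
import json
import urllib.request
from datetime import datetime, timezone


def build_rows(captured_at, consumed_kwh, produced_kwh):
    """Build the list of row objects to send (field names are hypothetical)."""
    return [
        {
            "captured_at": captured_at.isoformat(),
            "consumed_kwh": consumed_kwh,
            "produced_kwh": produced_kwh,
        }
    ]


def push_rows(push_url, rows, timeout=10):
    """POST the rows as JSON to the given push URL and return the HTTP status."""
    body = json.dumps(rows).encode("utf-8")
    request = urllib.request.Request(
        push_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


# Building the payload needs no network access; push_rows() is not called here.
rows = build_rows(datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc), 1234.5, 67.8)
payload = json.dumps(rows)
```

The field names in each row would have to match the fields defined on the streaming dataset.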

Crontab was used at first in the project, but when I saw the GUI and simplicity of Airflow I couldn't resist any longer :-).

In this video, I cover the Dockerfile, volume setup, and how I configured the docker-compose.yml file. I also show how I deploy and run the container on my local Synology NAS server using a few Linux commands.

This application consists of four interconnected containers.

Here I explain how I use TensorFlow to read the index, how I store the pictures, whether successfully processed or not, and how I push them to the Django REST API.

Django is the central piece of the puzzle because it collects the data flow through the REST API framework and displays the reports and graphics in the browser. In that video I start with phpMyAdmin to display the data tables stored in the MySQL database, then I briefly explain that Django created these tables from classes in model.py. Django is also practical because it separates the work of the Python coders from that of the HTML designers.
